A PhD project by Dr. Thomas Fankhauser with the

University of the West of Scotland and

Stuttgart Media University

Web Scaling Frameworks

Building scalable, high-performance, portable and interoperable Web Services for the Cloud

A PhD project by Dr. Thomas Fankhauser with the

University of the West of Scotland and

Stuttgart Media University

Building scalable, high-performance, portable and interoperable Web Services for the Cloud

Through social traffic, smart health and the Internet of Things humans create increasingly more data. To process this data, complex cloud computing systems need to be set up manually by each customer and for each provider. In the project, a framework is proposed that automatically sets up, manages and scales cloud resources efficiently and in an optimised fashion.

The major optimisation schemes are an optimised request flow that distributes requests to different subsytems based on their HTTP verb. GET and HEAD requests go to the read subsystem, where all other requests go to a processing subsystem. Further, resource dependency processing tracks dependencies between all requestable resources and processes updates in a distributed fashion with optimised processing trees. All this functionality is put into a Web Scaling Framework so it can be reused by multiple web services.

The amount of data to process continuously increases. At the same time, results of these processings are most valuable close to their collection. While cloud computing provides the resources for fast processing, state-of-the-art web applications need to be customised to utilise cloud offerings. Web Scaling Frameworks are proposed to reduce this costly customisations.

A complete storage of all requestable resources and tracking of their exact dependencies can enable a more efficient processing, access and scaling performance. In general, it makes sense to process time-critical actions when time is available: on write. Due to client logic, eventual consistency in the web is possible and allows for optimised processing in the background.

The work proposes to not use cache eviction but an exact processing and definition of dependencies between all resources. This is close to a static site generation approach that recreates sites on updates. Thereby, all resources are ready to deliver at any point in time.

The following is a list of the major findings from the project. To put them in context, please refer to the full thesis.

The major design goal for Web Scaling Frameworks is to create an architecture that enables to build maintainable, automatable, scalable, resilient, portable and interoperable implementations of WSFs.

The cloud on the left side of the Figure presents the logical structure of a WSF, where the modules within this cloud implement the core functionality of a WSF. The right side of Figure shows the components that are managed by a WSF. The components provide services and functionalities needed to operate a full web application. One type of component is the worker component at (g), which hosts the application logic that is implemented with the help of a WAF. The worker component joins a WSF with a Web Application Framework, where the worker logic is implemented by the WSF and the application logic is implemented by the WAF.

The illustrated composition of components in (a-e) is created with two major design goals: optimised performance and enhanced scalability. The approach to optimising the performance is to minimise the request flow graph for every request.

The Figure at (a-e) illustrates the detailed flow of a request through the components: Requests enter the system through multiple load balancers LB at (a). The load balancers LB forward the request to one of the dispatchers D. The dispatcher D now decides whether the request is a read request RR or a processing request RP. Read requests RR are determined by the HTTP methods GET, HEAD and OPTIONS that do not have any side effects. Processing requests RP are requests with all other HTTP methods that by definition change content on the server. With this implementation, both subsystems can be scaled independently on a component level.

Keeping all resources updated in the resource storage requires the declaration of dependencies between all resources as a directed acyclic graph (DAG). For an efficient processing of dependencies the problem is formulated as follows: Given a vertex v from a dependency graph DG, how long does it take to process all dependencies of v while ensuring correct processing order.

By topologically sorting a DAG, a linear ordering of vertices is generated guaranteeing a vertex v1 to come before a vertex v2 if an edge v1 → v2 exists. If dependencies are processed by the calculated order, it is guaranteed all changes are reflected in all dependent resource vertices. The approach can be optimised further by finding branches of jobs eligible for parallel processing as their outputs do not depend on other resources. The project therefore develops a topological sort algorithm that applies dynamic programming to extract a forest of optimal processing trees from a dependency graph.

The overall performance of a web application integrated into a WSF is a trade-off among optimised processing cost, processing duration and storage space. The Figure shows a SPD (read speedy) performance optimisation triangle in the style of the CAP theorem. The triangle illustrates how a system can only be optimised for two out of three goals simultaneously.

A traditional web application with vertical scaling typically requires low storage space S as it caches only parts of all resources. It further can achieve low processing durations D by scaling the system vertically, e.g. by adding a faster CPU or better network. However, it can not exhibit low processing cost P as vertical scaling is more expensive than horizontal scaling and cache misses need to be processed.

A traditional web application with horizontal scaling also requires low storage space S due to partial caching. It further can achieve low processing cost P by employing multiple, inexpensive machines with low hardware specifications. However, with these low hardware specifications it can not achieve low processing durations D, with the same number of machines than SD uses.

A web application using resource dependency processing can provide low processing durations D as all requestable resources are preprocessed and immediately available for delivery. It further can achieve low processing cost P as it processes only requests that require processing and does not evict and reprocess resources to save storage space. Consequently, it can not achieve the goal for low storage space S.

The following is a list of work that has been reviewed, presented and published at research conferences or in journals.

Fankhauser, T., 2016

University of the West of Scotland, PhD Thesis

Fankhauser, T., Q. Wang, A. Gerlicher and C. Grecos, 2016

IEEE Transactions on Services Computing, Journal Paper

Fankhauser, T., Q. Wang, A. Gerlicher, C. Grecos and Wang, X., 2015

IEEE Transactions on Services Computing, Journal Paper

Fankhauser, T., Q. Wang, A. Gerlicher, C. Grecos and Wang, X., 2014

IEEE International Conference on Communications, Sydney, Conference Paper

The following is a list of animations and videos created throughout the project.

See the request routing to different subsytems and scaling to an optimal number of machines in action.

See the resource dependency processing for a dependency graph in action.

Resource Dependency Processing Animation

Generate a random resource dependency graph and extract all optimised dependency processing trees.

Shows the differences between a traditional and a resource dependency processing approach with a Twitter-like web application named LinkR.

The following is a list of talks and presentations given in the course of the project.

Viva presentation held at University of the West of Scotland in 2016.

Presentation held at the Annual Research Conference at the University of the West of Scotland in 2015.

UWS Annual Research Conference Presentation

Presentation held for the Transfer Event at the University of the West of Scotland in 2014.

Presentation held at the IEEE International Conference on Communications 2015 in Sydney, Australia.

The initial research project pitch presentation that started the project in 2013.

The following data traces and samples were used to evaluate the models created throughout the project.

For the evaluation the following traffic traces were used: social.csv.zip trip.csv.zip

For the evaluation, the following resource dependency graphs were used: fuzzy-resources.tar.gz fuzzy-results-requestor.tar.gz fuzzy-results-sequencer.tar.gz service-based-resources.tar.gz service-based-results-requester.tar.gz service-based-results-sequencer.tar.gz service-based-traffic.tar.gz service-structure-graphs.tar.gz

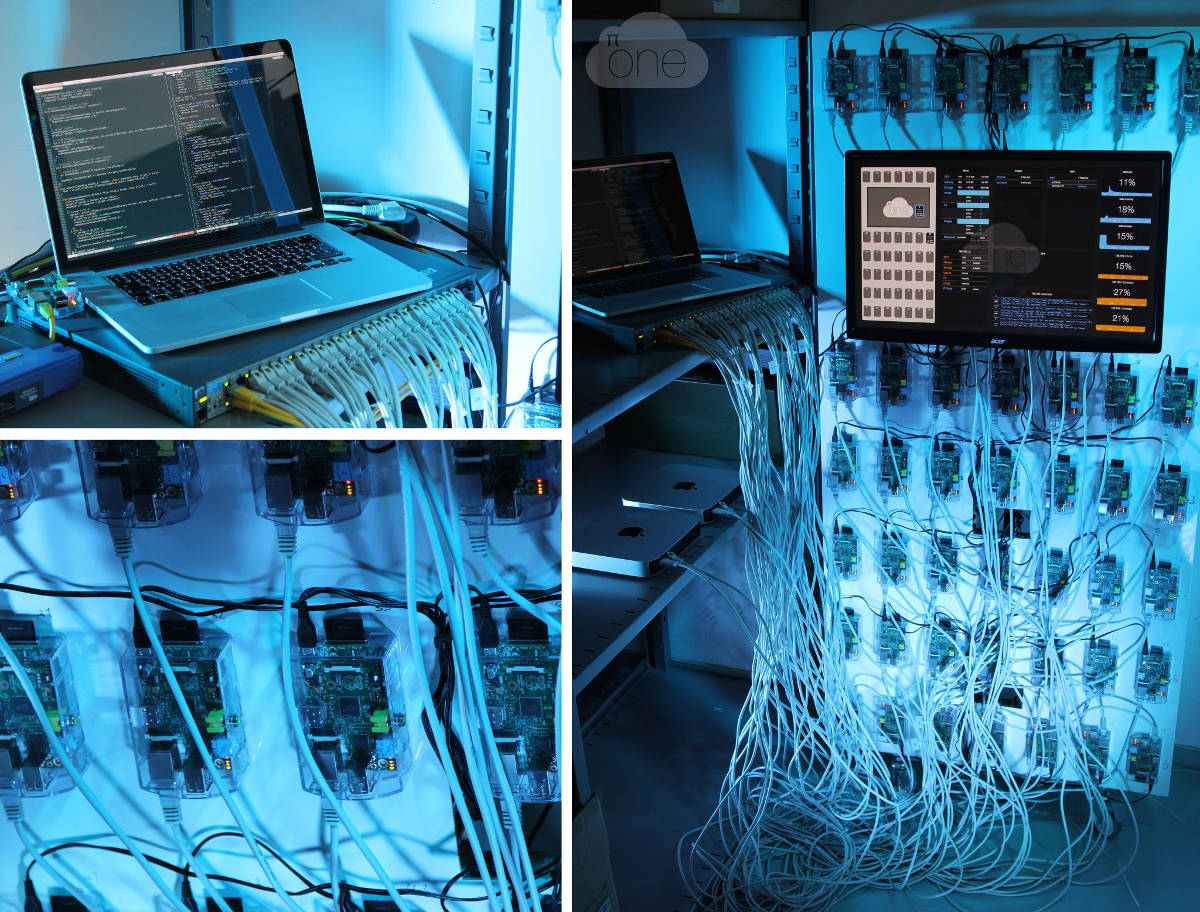

Most of the evaluations were performed on the Pi-One, a cluster of 42 Raspberry Pi computers.

If you are interested in the project or have an interesting performance problem you need to solve, do not hesitate to get in touch with me by mail tommy@system8.io.

Dr. Thomas Fankhauser

Karl-Rebmann-Str. 12

74189 Weinsberg

Germany

Big thanks to my supervisors Prof. Dr. Qi Wang, Prof. Dr. Ansgar Gerlicher and Prof. Dr. Christos Grecos for their fantastic support and to Prof. Dr. Dimitrios Pezaros and Prof. Dr. Feng for examining the thesis.

webscalingframeworks.org is a private, non-commercial website. Your privacy is respected by not collecting any data.